- See also, in Swedish, the project churchyard

- Riksarkivet SBL: a project adrift

- shadow backlogs: RAÄ / Riksarkivet / KB / DIGG

Update 2022-apr: Europeana now has a bug-reporting system and we get unique ids; this issue has id EFS-1261, see also GITHUB

Latest update 2022-may-1: Europeana doesn't see this as urgent --> Wikipedia will not link to Europeana as the quality is too bad ;-(

- painter Carl Larsson = Wikidata Q187310

- photographer Carl Larsson = Wikidata Q5937128

- The County Museum of Gävleborg has estimated that they have 1 million objects by this photographer --> I guess they will end up in Europeana under the artist "Carl Larsson"

- Another example

- Jenny Lind

- Europeana person/148113

What should have been done is to use unique persistent identifiers; see how a Swedish runic researcher understood that already in 1750, 250 years ago (link)

|

| The painter Carl Larsson, agent/base/60886, where most of the objects displayed have nothing to do with the painter |

Can we trust Europeana metadata or is it fake metadata?

- Why can't a network like Europeana deliver good quality?

- Why does no one care?

- en:Wikipedia did, see T243764

- Are top-down networks like Europeana not suited for new technologies like LinkedData?

- Europeana did a prototype in 2012, and today, in 2020, we see no result. Why? Instead, en:Wikipedia takes an active decision that the quality is too bad to link to Europeana....

- Is Europeana too academic? It speaks about RDF instead of quality-assuring the data delivered, and lacks the skills to communicate basic things, like: artist A in your museum needs to be stated as being the same as artist B in Wikipedia...

- Are museums in Europe not skilled enough?

- In Sweden I see that it's the same people who have been working with museums and linked data since 2012, and no one cares that they don't deliver. Why?

Example: in Sweden we built a semantic layer called UGC-hub ("user-generated content") in 2012, and it has < 20 properties, compared with > 7000 in Wikidata; see the Jupyter Notebook checking the status of Swedish K-samsök/kulturnav - no users are using it...

- nearly no semantics...

- new technologies need new skills - I guess everyone agrees about that, but we don't see it with museums.... why?

Metadatadebt - the cost of getting your data usable because of lack of good metadata.

This is an example of Metadatadebt caused by text string matching of entity names --> the result is a database with very bad search precision and a lot of noise, which is not trustworthy. One reason for having good linked data is to uniquely identify a person/place/organisation/concept even if the names are the same. Not caring about or understanding this makes the data more or less unusable, and I as an end user can't understand what is presented. In this case it's a person's name, "Carl Larsson", that is assumed to be unique. As both Carl (also spelled Karl) and Larsson are on the lists of the most common given names and surnames in Sweden, it's asking for problems not to use Linked data and 5-star open data, i.e. link your data to other data to provide context..... NOT send text strings...
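To make the difference concrete, here is a minimal sketch in Python. It is not how Europeana works internally; the object titles and field names are invented for illustration, and only the two Wikidata ids come from this post. It groups the same three records once by name string and once by identifier.

```python
from collections import defaultdict

# Hypothetical sample records. The titles and field names are invented;
# the creator ids are the two Wikidata items mentioned in this post.
records = [
    {"title": "Watercolour, family interior", "creator_name": "Carl Larsson", "creator_id": "Q187310"},
    {"title": "Studio portrait, Gävle", "creator_name": "Carl Larsson", "creator_id": "Q5937128"},
    {"title": "Street view, Gävle", "creator_name": "Carl Larsson", "creator_id": "Q5937128"},
]


def group_by(items, key):
    """Group object titles by the value of the given field."""
    groups = defaultdict(list)
    for item in items:
        groups[item[key]].append(item["title"])
    return dict(groups)


# Matching on the text string merges both persons into one "agent".
by_name = group_by(records, "creator_name")

# Matching on a persistent identifier keeps the painter and the photographer apart.
by_id = group_by(records, "creator_id")

print(len(by_name), "agent(s) when matching on name")
print(len(by_id), "agent(s) when matching on identifier")
```

With strings only, there is nothing left in the data that could separate the two persons again afterwards.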

|

| Pictures from 5stardata.info |

The cost of very bad metadata and creating new #fakemetadata

Other websites don't link to Europeana:

- en:Wikipedia, with 112 billion yearly views, could with two lines of code add 160 000 links to Europeana using the links in Wikidata, but decided not to do that because of the lack of quality in Europeana, see T243764

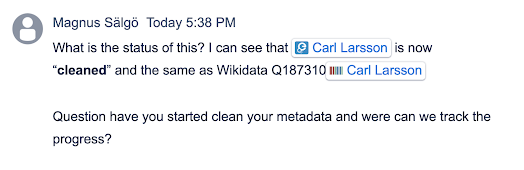

- Interestingly, this was reported to Europeana on 6 Jan 2020 but we can't track Europeana's actions. Compare how a modern and successful project like Wikidata has workboards where you can track/comment/subscribe to changes

| Quality issues in Europeana, T243764 --> decision: don't link them |

| 112 B yearly page views on en:Wikipedia; as we use Linked data it can easily scale to all Wikipedias and 254 B views in > 300 languages |

| Wikidata Change Stream: how Google in 20 minutes reads the Wikidata change stream, adds this metadata to its knowledge graph and delivers a better product --> tweet |

Europeana <-> Wikidata

I am a big fan of Linked data and Wikidata and started to link a lot of Swedish institutions to get added value. The latest work I did was adding 160 000 connections with Europeana Collections to Wikidata, see the links below. The problem is that a person such as the painter Carl Larsson = Wikidata Q187310 is mixed up with the photographer Carl Larsson = Wikidata Q5937128, from whom the Swedish museum Gävleborgs Länsmuseum has more than 1 000 000 items, many of which are uploaded to Europeana.

bigger picture / Europeana link and issue reported 2020 jan 6 T240809#5777845

I guess the reason we get this problem in Europeana is:

- lack of entity management in Europeana

- Items that are connected to an entity at the Swedish County Museum of Gävleborg are translated to the text "Carl Larsson" when uploaded to Europeana

- Europeana people then make a big mistake: they text match everything called Carl Larsson and believe it is the same person

- lack of feedback/error tracking

- Europeana lacks basic change management: this error was reported early 2020, see task T240809#5777845, and we have got no helpdesk id or action plan yet.

- no network of museums that speak with each other and care about quality in Europeana

- compare en:Wikipedia, which reacted and within 2 weeks decided that we can't link to Europeana as the quality is not good enough, see T243764

- The cost of bad metadata: not getting linked 160 000 times from one of the biggest websites, with > 112 billion yearly views

Quality issues T243764 |

| Carl Larsson agent/base/60886 |

|

| Good metadata is converted to text strings and then Europeana starts guessing and adds new errors |

Background how Wikidata and LinkedData scale

As we add this connection Europeana <-> Wikidata we can also easily add links from different language versions of Wikipedia to Europeana, e.g.

- Wikidata Carl Larsson is Q187310

- Wikidata connects 51 Wikipedia language versions e.g.

- Swedish sv.wikipedia:Carl_Larsson

- Bulgarian bg.wikipedia.org:Карл Ларсон

- English en.wikipedia:Carl_Larsson

- Chinese zh.wikipedia:卡爾·拉森

- etc...

- Wikidata also connects > 4000 external sources with "same as" statements

- Carl Larsson Q187310 is same as

- Swedish National Archives SBL 11035

- Swedish Nationalmuseum 3877

- Kulturnav 499ecba4-945b-4cd1-88a2-40df6bda5d47

- Europeana agent/base/60886 --> 578 objects

- etc...

- As we have this tight connection between a Wikipedia article and Wikidata, we have data-driven templates ==> by adding a template we can add links to external sources rather easily and fast; with just 2 lines of code we change > 1 million pages......

- Example

- Authority template adds links at the bottom of an article

- Russian Wikipedia uses it on > 237 000 pages

- Spanish Wikipedia on > 1 401 486 pages

- Swedish Wikipedia on > 82 000 pages

- If we combine the Wikipedia pages that have the Authority template with the Europeana property set in Wikidata, we get the number of pages that will change if we add the Europeana property to the Authority template

- Russian Wikipedia and Europeana > 20 000 pages

- Spanish Wikipedia and Europeana > 34 800 pages

- Swedish Wikipedia and Europeana > 11 100 pages

How this looks in Russian Wikipedia for Carl Larsson = Ларссон, Карл Улоф

How this looks in Bulgarian Wikipedia for Carl Larsson = Карл Ларсон

A guess why we have this problem with "Strings" in Europeana

- The Swedish museum Gävleborgs Länsmuseum is doing excellent work tracking objects that belong to the photographer Carl Larsson = Wikidata Q5937128

- Gävleborgs Länsmuseum uploads its data to Digitalmuseum, where we can easily find the objects of the photographer Carl Larsson = Wikidata Q5937128 using the Authority 48fd203b-2b93-4b0e-89a4-64e0a4509ce0; we don't do name matching --> we have control of the data

- Objects from the Swedish Digitalmuseum database are uploaded to Europeana, and in this process there is no entity management. Instead we start using text strings, i.e. "Carl Larsson" does not uniquely identify whether a person is

- same as Wikidata Q5937128

- or same as Wikidata Q187310

They are both stored in the system as the text string "Carl Larsson" and we have a very big metadatadebt, as basic things like copyright can be based on the creator's death date. Losing control of who is who also means losing control of many other parameters, and you need to ask the original source if they have control.... (hopefully you can identify the record in the original system)

In this case the Europeana data is useless for understanding "who is who"

- My guess is that the Europeana people have no understanding that in Sweden we can have several people with the same name Carl Larsson; "merging" all Carl Larssons and calling them Europeana agent/base/60886 is something we are glad banks don't do ;-) --> we have a mess

- Small test of the Europeana data ...

- maybe filter on date as the painter Carl Larsson died January 22, 1919 and the photographer Carl Larsson had a company that delivered in his name later than that date

- Most of the items from Gävleborgs Länsmuseum are not by the painter, see test search with filter proxy_dc_publisher:"Länsmuseet%20Gävleborg" --> 43,900 objects

- another test: filter on aggregator

f[PROVIDER][]=Swedish+Open+Cultural+Heritage+|+K-samsök

could work, but as our two Carl Larssons were active during the same period and worked just 75 km from each other, I guess the aggregator filtering will not help us....
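The date idea from the small test above can be sketched like this. It is only a sketch under assumptions: the record ids, the `created` field and the sample dates are invented, and only the painter's death date comes from this post. It also shows the limit of the approach: everything dated before the death date stays ambiguous.

```python
from datetime import date

# Death date of the painter Carl Larsson, as stated in this post.
PAINTER_DEATH = date(1919, 1, 22)

# Hypothetical records: ids, field name and dates are invented for illustration.
records = [
    {"id": "obj-1", "created": date(1905, 6, 1)},
    {"id": "obj-2", "created": date(1925, 3, 15)},
    {"id": "obj-3", "created": None},  # many records carry no usable date
]


def classify(record):
    """Say what a creation date alone can tell us about the creator."""
    created = record["created"]
    if created is None:
        return "unknown"
    if created > PAINTER_DEATH:
        # Made after the painter's death, so it cannot be his work.
        return "not the painter"
    # Both persons were active in this period; the date cannot decide.
    return "ambiguous"


result = {r["id"]: classify(r) for r in records}
print(result)
```

A date filter can therefore only remove some of the false matches; it cannot replace the identifier that was lost on the way into Europeana.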

The Solution

- Better system for communication and tracking errors

- Better skills when handling entities

As Wikipedia is now also moving the project Wikicommons in the direction of Linked data this challenge will explode --> today organisations need to step up and add new skills if they want to avoid issues like the above and use the new technology. I guess we need

- Entity change management

- If we try to have a Linked data roundtrip we also need to synchronize entities

About structured data on Commons: a solution with Linked data describing pictures

Some definitions

- Linked data roundtrip: the process where a picture is uploaded to Wikicommons, linked data is added to the picture, and you would like to feed this metadata back to the original uploading system.

As we now have Linked data --> we also need entity data management and synchronization of changes to the linked data entities themselves

- Metadatadebt: compare technical debt; when the metadata we manage lacks quality we have to pay a price for correcting it. In the case above I guess Europeana needs to reload all metadata and set up entity data management between Europeana and all Europeana aggregators and providers

An example of the cost paid by Europeana is that, in the example above, Europeana missed the opportunity to get linked from 140 000 articles in en:Wikipedia because of entity metadatadebt. As en:Wikipedia has > 15 B monthly page views I guess it's an insane "debt" and lessons-learned fee the Europeana people have to pay.

Today I feel the Europeana community tries to hide this problem, as we see no emergency actions, see

- T243764: en:Wikipedia <-> Europeana Entity has a problem with the quality of Europeana Linked data

- Entity data management

- When handling linked data we also need change management on the linked data objects.

If we are to do Linked data roundtrips on uploaded pictures, we need to find a way to synchronize the objects used in the two linked data domains, or do ontology reuse. Wikicommons has chosen to reuse the Wikidata ontology, i.e. a change in Wikidata will directly be reflected in Wikicommons. See also the OCLC report "Creating Library Linked Data with Wikibase" and "Lesson 5: To populate knowledge graphs with library metadata, tools that facilitate the reuse and enhancement of data created elsewhere are recommended"

Steps I think are getting more and more important, as entity management will also be the de facto standard for metadata in pictures

- pictures need to have unique persistent identifiers

- when uploading a picture we need to be able to track it

- metadata added to a picture

- metadata deleted

- if this picture is downloaded to another platform

- Example of how Wikicommons now uses Wikibase and has unique identifiers with linked metadata available in JSON

- example picture XLM.BE0228 = Digitalmuseum 021016619473

- uploaded to Wikicommons

as File:XLM.BE0228_Sj%C3%A4landerska_kollegiet...

- metadata in JSON Special:EntityData/M87692341.json

- My guess is that with this new complexity of handling entity management and linked data between loosely coupled systems, the complexity for the end user will increase, and we need to design better user interfaces "hiding" this complexity from the end users
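As a rough illustration of the Wikicommons example above, the sketch below builds the Special:EntityData address for a media id and pulls the linked entity ids out of such a JSON document. The embedded sample is a simplified assumption of the document shape, and the statement in it is a placeholder, not the real metadata of M87692341; the helper names are my own.

```python
import json


def entity_data_url(media_id):
    """Build the Special:EntityData address for a Commons media id."""
    return f"https://commons.wikimedia.org/wiki/Special:EntityData/{media_id}.json"


# Simplified, assumed shape of the JSON document. The P180 ("depicts") value
# is a placeholder for illustration, not the actual content of this file.
sample = json.loads("""
{
  "entities": {
    "M87692341": {
      "id": "M87692341",
      "statements": {
        "P180": [
          {"mainsnak": {"datavalue": {"value": {"id": "Q30312943"}}}}
        ]
      }
    }
  }
}
""")


def linked_items(doc, media_id):
    """Return {property id: [entity ids]} for all statements that point at entities."""
    statements = doc["entities"][media_id].get("statements", {})
    found = {}
    for prop, claims in statements.items():
        ids = []
        for claim in claims:
            value = claim.get("mainsnak", {}).get("datavalue", {}).get("value")
            if isinstance(value, dict) and "id" in value:
                ids.append(value["id"])
        if ids:
            found[prop] = ids
    return found


print(entity_data_url("M87692341"))
print(linked_items(sample, "M87692341"))
```

The point is that the metadata is a set of identifiers, not text strings, so another platform can only reuse it if it can resolve those identifiers.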

Today in Wikicommons we import pictures and retype the metadata

- with metadata that consists of entities like Q87101582 Q30312943, it is not possible for the end user to retype it

We need the people designing digital archives to sit down and define an infrastructure that supports a user interface for the end user with "drag-and-drop" that takes care of

- controlling the copyright of the picture

- translating entities from one platform to the user's current platform

- telling the user that this picture is said to depict person xxx, who is yyy on the "old platform", and asking: do you want to create this entity as same as external ID yyy
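A minimal sketch of the "translate entities" step could look like the following. The function name, the status values and the unknown test id are hypothetical; the two authority ids and their Wikidata counterparts are the ones quoted earlier in this post. An id without a known "same as" link is handed back to the user for review instead of being guessed from the name.

```python
# "Same as" links between the source platform's authority ids and Wikidata,
# using the two pairs quoted in this post.
SAME_AS = {
    "499ecba4-945b-4cd1-88a2-40df6bda5d47": "Q187310",   # painter Carl Larsson
    "48fd203b-2b93-4b0e-89a4-64e0a4509ce0": "Q5937128",  # photographer Carl Larsson
}


def translate_entity(source_id, mapping):
    """Translate an entity id from the old platform to the current platform.

    Hypothetical helper: never falls back to name matching; an unmapped id is
    returned for review so the user can create or link the entity explicitly.
    """
    target_id = mapping.get(source_id)
    if target_id is None:
        return {"status": "needs_review", "source_id": source_id, "target_id": None}
    return {"status": "matched", "source_id": source_id, "target_id": target_id}


photographer = translate_entity("48fd203b-2b93-4b0e-89a4-64e0a4509ce0", SAME_AS)
unknown = translate_entity("00000000-0000-0000-0000-000000000000", SAME_AS)
print(photographer)
print(unknown)
```

The "needs_review" branch is where a user interface would ask the question from the last bullet above: do you want to create this entity as same as the external id?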

- tweet about this #Metadatadebt

Example of #Metadatadebt in #Europeana and how a person called "Carl Larsson" is not always same as "Carl Larsson" https://t.co/G5nbFQLQb0 #LinkedData needs #EntityChangeManagement and #linkedpeople pic.twitter.com/6KC490RitK (Magnus Sälgö, @salgo60, March 9, 2020)

- tweet how I see #metadatadebt

— Magnus Sälgö (@salgo60) March 10, 2020

- tracking this issue see T240809#5777845 Structured Data: How can GLAMs grab the low hanging fruit?

- example JSON of a Europeana record that is wrongly connected, see blogpost

- we need to measure metadata quality see T237989#6025431

- GitHub: Europeana QA Spark; Antoine Isaac of Europeana about the simple linking they do, 2015

- Structured Data: How can GLAMs grab the low hanging fruit? video

- Europeana We want better data quality: NOW! april 30 2015

- Europeana Innovating metadata aggregation in Europeana via linked data

- Europeana strategy 2020-2025

- status I understand is

- willingness is high to use Linked data

- but Organizational capability is not
